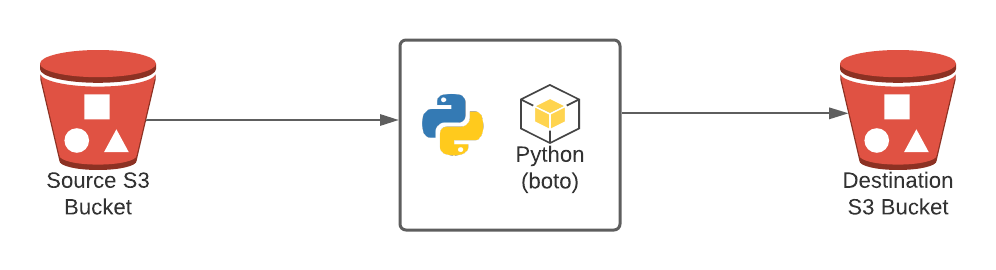

Today, we are going to discuss how to copy files from one S3 bucket to another when the two buckets use different credentials. We will first implement it and then explain the code.

```python
import io

import boto3

# Credentials for the account that owns the source bucket
S3_ARGS_SOURCE = {
    'aws_access_key_id': "your_aws_access_key",
    'aws_secret_access_key': "your_aws_secret_access_key",
    'region_name': "region_name"
}

# Credentials for the account that owns the destination bucket
S3_ARGS_DEST = {
    'aws_access_key_id': "your_aws_access_key",
    'aws_secret_access_key': "your_aws_secret_access_key",
    'region_name': "region_name"
}

# Initialize S3 resources, one per set of credentials
s3_dest = boto3.resource("s3", **S3_ARGS_DEST)
dest_bucket = s3_dest.Bucket("destination_bucket_name")

s3_source = boto3.resource("s3", **S3_ARGS_SOURCE)
source_bucket = s3_source.Bucket("source_bucket_name")

# Copy every object whose key starts with "test/"
for obj in source_bucket.objects.filter(Prefix="test/"):
    full_path = obj.key
    # Download the object using the source credentials
    response = obj.get()
    file_content = io.BytesIO(response["Body"].read())
    # Upload it under the same key using the destination credentials
    upload_obj = dest_bucket.Object(full_path)
    upload_obj.upload_fileobj(file_content)
```

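In the code above, we first initialise two dictionaries with the credentials for the source and destination buckets. We then create a separate S3 resource from each set of credentials and use it to initialise the corresponding `Bucket` object. Keeping two resources means every read from the source uses the source credentials and every write to the destination uses the destination credentials. This is also why the code downloads and re-uploads each object instead of using S3's server-side copy, which runs under a single set of credentials that would need access to both buckets.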
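Hard-coding access keys in the script makes them easy to leak. One option is to build the same two dictionaries from environment variables instead. The sketch below assumes a hypothetical naming convention (`SOURCE_AWS_ACCESS_KEY_ID`, `DEST_AWS_SECRET_ACCESS_KEY`, and so on) and a hypothetical helper `s3_args_from_env`; adapt both to your own deployment.

```python
import os


def s3_args_from_env(prefix, environ=os.environ):
    """Build a boto3 credentials dict from <prefix>_AWS_* variables.

    Hypothetical naming convention, e.g. SOURCE_AWS_ACCESS_KEY_ID.
    Raises KeyError if a required variable is missing, so the script
    fails before making any request to S3.
    """
    return {
        "aws_access_key_id": environ[f"{prefix}_AWS_ACCESS_KEY_ID"],
        "aws_secret_access_key": environ[f"{prefix}_AWS_SECRET_ACCESS_KEY"],
        "region_name": environ[f"{prefix}_AWS_REGION"],
    }
```

With this helper, `S3_ARGS_SOURCE = s3_args_from_env("SOURCE")` and `S3_ARGS_DEST = s3_args_from_env("DEST")` replace the two literal dictionaries, and the rest of the script stays the same.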

In the last section, we iterate over the objects in the source bucket whose keys start with the prefix “test/”. If you don't need a filter, use `source_bucket.objects.all()` instead of `filter(Prefix="test/")`.
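S3's `Prefix` parameter is a literal "starts with" match on the object key, not a folder or wildcard pattern. The small sketch below mimics that rule locally on a list of made-up example keys, which can help predict which objects the loop will copy. The function `keys_under_prefix` is hypothetical and does not call S3.

```python
def keys_under_prefix(keys, prefix):
    """Return the keys that S3's Prefix filter would match.

    S3 compares the literal start of each key, so "test/" matches
    "test/a.txt" and "test/sub/b.txt" but not "test-data/c.txt".
    An empty prefix matches every key.
    """
    return [key for key in keys if key.startswith(prefix)]


# Example keys, invented for illustration
example_keys = ["test/a.txt", "test/sub/b.txt", "test-data/c.txt", "mytest/d.txt"]
print(keys_under_prefix(example_keys, "test/"))  # ['test/a.txt', 'test/sub/b.txt']
```

Note that dropping the trailing slash changes the result: `"test"` would also match `test-data/c.txt`.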

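For each object, we read its content into an in-memory buffer, `file_content`, and then upload it to the destination bucket under the same key. Because the whole object is held in memory, this approach works best for small and medium-sized files.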
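For larger files, a possible variation is to hand the streaming body returned by `get()` straight to `upload_fileobj`, which only needs an object with a `read` method. The sketch below assumes the `source_bucket` and `dest_bucket` resources created earlier; the helper name `copy_prefix` is hypothetical. The transfer manager should then read the stream in parts rather than requiring the whole object to fit in memory at once.

```python
def copy_prefix(source_bucket, dest_bucket, prefix=""):
    """Copy every object under `prefix` from source_bucket to dest_bucket.

    Hypothetical helper: both arguments are expected to be boto3 Bucket
    resources created with their own credentials, as in the code above.
    Returns the list of keys that were copied.
    """
    copied = []
    for obj in source_bucket.objects.filter(Prefix=prefix):
        # get() runs with the source credentials; "Body" is a readable stream
        body = obj.get()["Body"]
        try:
            # upload_fileobj reads from the stream using the destination
            # credentials, instead of us buffering everything with BytesIO
            dest_bucket.Object(obj.key).upload_fileobj(body)
        finally:
            body.close()
        copied.append(obj.key)
    return copied
```

Usage: `copy_prefix(source_bucket, dest_bucket, prefix="test/")`.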

The code above is a working example. If you face any issues or have suggestions, feel free to leave a comment below.

Happy coding!!
